Introduction

Functional testing confirms that every feature behaves the way users expect. It checks whether user flows work, inputs are handled correctly, and outputs match what the product team intended. Because these tests cover the core experience, doing them poorly lets issues reach production and affect users.

Many teams still repeat avoidable mistakes during functional testing. These gaps slow releases, create unnecessary rework, and leave defects unnoticed.

Common Functional Testing Mistakes

1. Testing Without Clear Requirements

Test cases become vague when product requirements are unclear or incomplete. Teams fall back on assumptions and personal interpretation, which produces inconsistent results because testers have no agreed definition of correct behaviour to verify against.

How to avoid it

Make sure requirements, expected outputs, and acceptance criteria are documented before test creation. Every test should map to a specific requirement to avoid confusion about what is being validated.
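One lightweight way to enforce "every test maps to a requirement" is to tag tests with requirement IDs and report any requirement that no test covers. The sketch below assumes a hypothetical in-code requirement catalogue and a hypothetical `covers` decorator; the IDs and requirement text are illustrative only, and a real team would usually pull requirements from its tracker instead.

```python
# Hypothetical requirement catalogue; IDs and wording are illustrative only.
REQUIREMENTS = {
    "REQ-101": "Login succeeds with a valid email and password",
    "REQ-102": "Login shows an error for a wrong password",
    "REQ-103": "Account locks after repeated failed login attempts",
}

# Maps each registered test name to the requirement it validates.
TRACEABILITY: dict[str, str] = {}


def covers(req_id: str):
    """Decorator that links a test function to a documented requirement."""
    if req_id not in REQUIREMENTS:
        # Refuse to register tests against requirements nobody wrote down.
        raise ValueError(f"Unknown requirement: {req_id}")

    def decorator(test_fn):
        TRACEABILITY[test_fn.__name__] = req_id
        return test_fn

    return decorator


@covers("REQ-101")
def test_login_valid_credentials():
    ...  # test steps for REQ-101 go here


@covers("REQ-102")
def test_login_wrong_password():
    ...  # test steps for REQ-102 go here


def uncovered_requirements() -> list[str]:
    """Return requirement IDs that no registered test maps to."""
    return sorted(set(REQUIREMENTS) - set(TRACEABILITY.values()))
```

Here `uncovered_requirements()` would flag `REQ-103`, making the gap visible before test execution starts rather than after a defect escapes.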

2. Relying Too Much on UI Tests

UI tests are useful, but they are slow, brittle, and sensitive to layout changes. Relying only on UI-level checks hides deeper issues in business logic, workflows, and API responses.

How to avoid it

Balance coverage. Include API tests, integration tests, and workflow tests that validate core logic. Use UI tests only for user-facing verification, not for every small rule or condition.
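As an illustration of moving rule checks below the UI, the sketch tests a discount rule directly instead of typing codes into a checkout form. The `apply_discount` function and the `SAVE10`/`SAVE25` codes are hypothetical stand-ins for logic that would normally sit behind an API; a single UI test could then confirm only that the discounted total is displayed.

```python
from decimal import Decimal

# Hypothetical business rule that a UI test would otherwise exercise
# indirectly through form fields and button clicks.
DISCOUNT_CODES = {"SAVE10": Decimal("0.10"), "SAVE25": Decimal("0.25")}


def apply_discount(total: Decimal, code: str) -> Decimal:
    """Return the order total after applying a discount code."""
    if total < 0:
        raise ValueError("total must be non-negative")
    rate = DISCOUNT_CODES.get(code.strip().upper())
    if rate is None:
        return total  # unknown codes leave the total unchanged
    return (total * (1 - rate)).quantize(Decimal("0.01"))


# Logic-level checks: fast, no browser, no dependence on page layout.
def test_valid_code_reduces_total():
    assert apply_discount(Decimal("100.00"), "SAVE10") == Decimal("90.00")


def test_code_is_case_and_whitespace_insensitive():
    assert apply_discount(Decimal("80.00"), " save25 ") == Decimal("60.00")


def test_unknown_code_leaves_total_unchanged():
    assert apply_discount(Decimal("50.00"), "BOGUS") == Decimal("50.00")
```

Each rule variation costs one fast function instead of one slow browser session.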

3. Ignoring Edge Cases

Many teams test only the “happy path”. Real users rarely behave perfectly, and edge cases, invalid data, empty fields, and interrupted flows are where many real functional bugs surface.

How to avoid it

Include tests that show how the app behaves when users make mistakes or when something breaks in the middle of a flow. For example, check what happens when someone enters an incorrect OTP, leaves a required field empty, or loses connection during a payment. These situations help you see issues that never appear in the clean, ideal path.
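A table of inputs and expected outcomes keeps negative cases as visible as the happy path. The sketch below assumes a hypothetical `verify_otp` function with made-up rules (six ASCII digits, surrounding whitespace ignored); the result codes are illustrative, not taken from any real product.

```python
import re

OTP_LENGTH = 6  # assumption: a six-digit numeric code


def verify_otp(entered: str, expected: str) -> str:
    """Classify an OTP submission (hypothetical validation rules)."""
    entered = entered.strip()
    if not entered:
        return "error:empty"
    if len(entered) != OTP_LENGTH or not re.fullmatch(r"[0-9]+", entered):
        return "error:format"
    if entered != expected:
        return "error:mismatch"
    return "ok"


# Happy path plus the negative cases described above.
CASES = [
    ("123456", "ok"),              # correct OTP
    ("654321", "error:mismatch"),  # incorrect OTP
    ("", "error:empty"),           # required field left empty
    ("   ", "error:empty"),        # whitespace only
    ("12345", "error:format"),     # too short
    ("12a456", "error:format"),    # non-digit character
]


def test_otp_cases():
    for entered, want in CASES:
        got = verify_otp(entered, expected="123456")
        assert got == want, f"{entered!r}: expected {want}, got {got}"
```

Adding a new edge case is a one-line change to `CASES`, which lowers the cost of covering unusual input.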

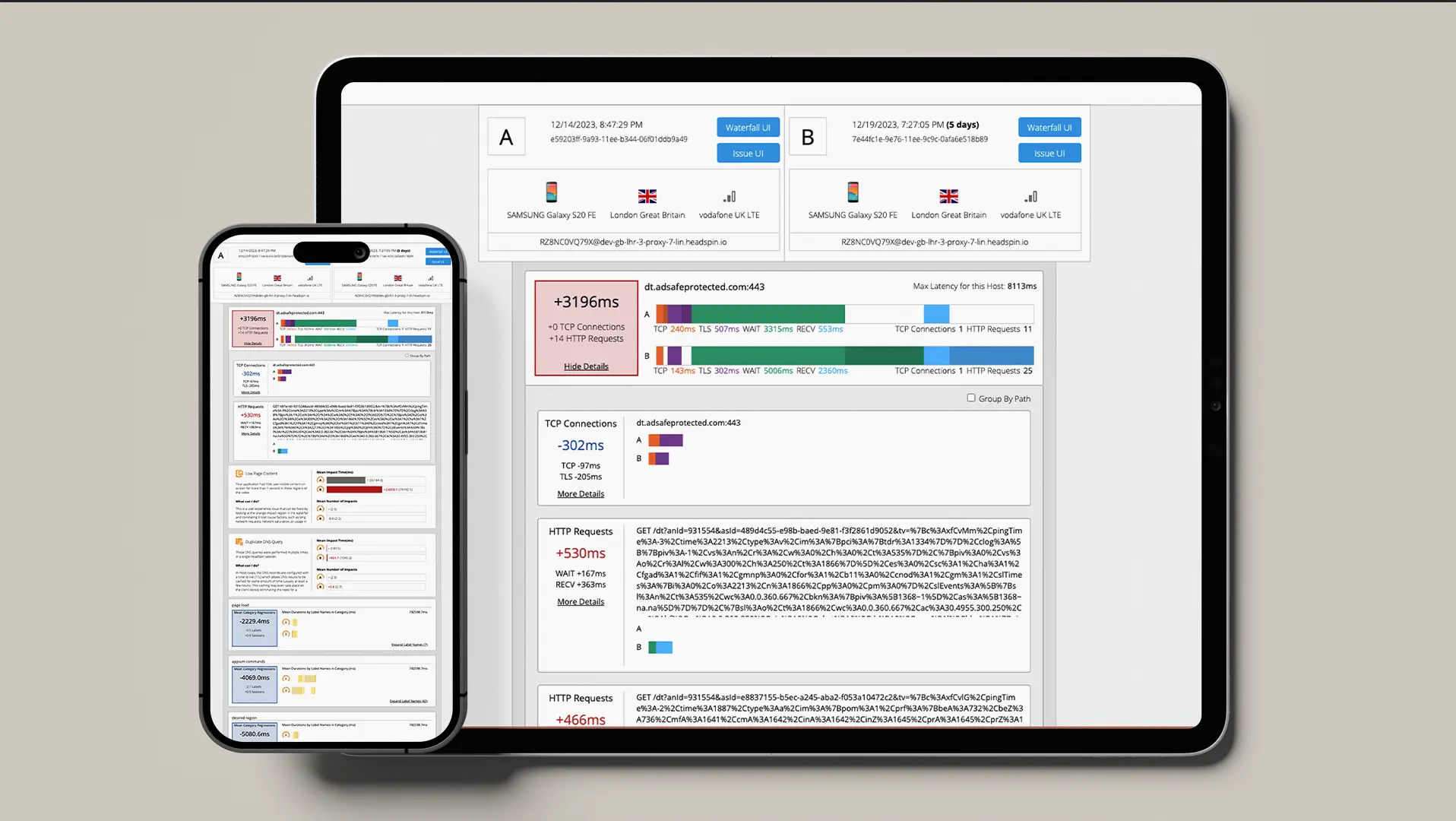

4. Not Testing Across Real Environments

Testing on only one device, one browser, or one OS version hides issues that appear in real-world use. Functional behaviour can change with device capabilities, browser engines, and OS versions.

How to avoid it

Run functional tests across multiple devices, browsers, and environments. Use real devices whenever possible for more reliable results. Avoid assuming that one environment represents them all.
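Spelling out the environment matrix explicitly makes it harder to quietly test on just one setup. This is a sketch only: the browser and platform values are placeholders, and `run_suite` is a hypothetical hook where a real test runner or device lab would be invoked.

```python
from itertools import product

# Illustrative environment dimensions; replace them with the devices,
# browsers, and OS versions your users actually run.
BROWSERS = ["chrome", "firefox", "safari"]
PLATFORMS = ["android-14", "ios-17", "windows-11"]

# Combinations that don't exist in practice (current Safari ships only
# on Apple platforms).
UNSUPPORTED = {("safari", "android-14"), ("safari", "windows-11")}


def build_matrix() -> list[tuple[str, str]]:
    """Return every supported (browser, platform) pair to run the suite on."""
    return [
        (browser, platform)
        for browser, platform in product(BROWSERS, PLATFORMS)
        if (browser, platform) not in UNSUPPORTED
    ]


def run_suite(browser: str, platform: str) -> str:
    # Hypothetical placeholder: dispatch to your runner or device lab here.
    return f"ran login+checkout on {browser}/{platform}"


if __name__ == "__main__":
    for env in build_matrix():
        print(run_suite(*env))
```

Keeping exclusions in one explicit set documents which combinations were skipped on purpose, rather than leaving gaps unexplained.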

5. Writing Tests That Are Too Complex

Overly complicated test cases confuse teams and lead to incorrect execution. Long steps, unclear actions, and mixed objectives make results unreliable.

How to avoid it

Write simple tests. One flow per test. Clear steps. Clear expected result. Anyone should be able to run the test without asking for clarification.
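Instead of one long test that adds, removes, and checks out in a single pass, each behaviour can get its own short test with an arrange, act, assert shape. The `Cart` class below is a minimal, hypothetical stand-in used only to show the structure.

```python
class Cart:
    """Minimal in-memory cart used only to illustrate test structure."""

    def __init__(self):
        self.items: dict[str, int] = {}

    def add(self, sku: str, qty: int = 1):
        if qty <= 0:
            raise ValueError("quantity must be positive")
        self.items[sku] = self.items.get(sku, 0) + qty

    def remove(self, sku: str):
        self.items.pop(sku, None)


# One flow, clear steps, one expected result.
def test_adding_same_item_twice_increases_quantity():
    # Arrange
    cart = Cart()
    # Act
    cart.add("SKU-1")
    cart.add("SKU-1")
    # Assert
    assert cart.items == {"SKU-1": 2}


def test_removing_item_empties_cart():
    cart = Cart()
    cart.add("SKU-1")
    cart.remove("SKU-1")
    assert cart.items == {}
```

When one of these fails, its name alone tells the team which behaviour broke.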

6. Poor Test Data Management

Functional tests fail for the wrong reasons when data is not controlled. Shared test accounts, missing cleanup steps, or outdated data cause inconsistent results.

How to avoid it

Use clean, isolated, synthetic data that resembles real user data. Reset or refresh data for every test cycle. Keep separate data pools for positive and negative cases.
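A minimal sketch of isolated test data: each test gets its own uniquely named synthetic user, and cleanup runs even when the test fails. `USER_STORE` is a hypothetical in-memory stand-in for the app's database or API, and the `valid` flag is one simple way to keep positive and negative data apart.

```python
import uuid
from contextlib import contextmanager

# Hypothetical stand-in for the real user store; a real suite would call
# the application's API or database here.
USER_STORE: dict[str, dict] = {}


def make_synthetic_user(valid: bool = True) -> dict:
    """Create realistic-looking but fake user data, unique per call."""
    suffix = uuid.uuid4().hex[:8]
    email = f"qa+{suffix}@example.com" if valid else f"not-an-email-{suffix}"
    return {"email": email, "name": f"Test User {suffix}"}


@contextmanager
def isolated_user(valid: bool = True):
    """Create a user for one test and always remove it afterwards."""
    user = make_synthetic_user(valid)
    USER_STORE[user["email"]] = user
    try:
        yield user
    finally:
        USER_STORE.pop(user["email"], None)  # cleanup runs even on failure


def test_profile_shows_name():
    with isolated_user() as user:
        assert USER_STORE[user["email"]]["name"].startswith("Test User")
    assert user["email"] not in USER_STORE  # nothing left for the next test
```

Because no two tests share an account, one test's leftovers cannot make another fail for the wrong reason.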

7. Skipping Regression Testing

Teams often test only new features. Existing flows break when new changes touch shared logic, and without regression checks those bugs stay hidden until users find them.

How to avoid it

Maintain a reliable regression suite that covers critical flows. Run it before each release. Update it whenever a feature changes.
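One way to keep a regression suite deliberate is to tag critical-flow tests and run exactly that tag before each release. In pytest this is usually done with custom markers and `pytest -m regression`; the plain-Python sketch below uses a hypothetical `tag` decorator and placeholder test bodies to show the same idea without dependencies.

```python
from typing import Callable

# Hypothetical registry of test functions keyed by tag.
REGISTRY: dict[str, list[Callable[[], None]]] = {}


def tag(*tags: str):
    """Register a test under one or more tags such as 'regression'."""
    def decorator(fn):
        for t in tags:
            REGISTRY.setdefault(t, []).append(fn)
        return fn
    return decorator


@tag("regression", "critical")
def test_login():
    assert True  # placeholder for the real login flow check


@tag("regression", "critical")
def test_checkout():
    assert True  # placeholder for the real checkout flow check


@tag("new-feature")
def test_wishlist_sharing():
    assert True  # placeholder for a check on a newly added feature


def run_tagged(t: str) -> list[str]:
    """Run every test registered under tag t; return names of failures."""
    failures = []
    for fn in REGISTRY.get(t, []):
        try:
            fn()
        except AssertionError:
            failures.append(fn.__name__)
    return failures


if __name__ == "__main__":
    failed = run_tagged("regression")
    print("release blocked:" if failed else "regression suite passed", failed)
```

When a feature changes, updating the suite means adjusting the tagged tests, so the release gate always reflects the current critical flows.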

8. Not Automating Repetitive Tests

Manual testing of routine flows slows teams down. It also increases the chance of missed steps and human error.

How to avoid it

Automate repetitive functional checks such as login, search, and form submissions. Keep manual testing for new features and exploratory reviews.
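Login is a typical candidate: the same handful of credential combinations gets re-checked by hand every release. The sketch turns those checks into data-driven cases. `ACCOUNTS`, `login`, and the email-normalisation rule are hypothetical stand-ins for the app under test; a real suite would drive the actual API or UI.

```python
# Hypothetical account store and login function standing in for the app
# under test.
ACCOUNTS = {"alice@example.com": "correct-horse"}


def login(email: str, password: str) -> bool:
    """Return True when the credentials match a known account."""
    return ACCOUNTS.get(email.strip().lower()) == password


# Cases a tester would otherwise click through by hand on every release.
LOGIN_CASES = [
    ("alice@example.com", "correct-horse", True),
    ("ALICE@example.com ", "correct-horse", True),  # email is normalised
    ("alice@example.com", "wrong-password", False),
    ("bob@example.com", "correct-horse", False),    # unknown account
    ("", "", False),                                # empty form
]


def test_login_cases():
    failures = [
        (email, password)
        for email, password, expected in LOGIN_CASES
        if login(email, password) != expected
    ]
    assert not failures, f"unexpected login results for: {failures}"
```

The same pattern extends to search and form submissions, leaving testers free for new features and exploratory sessions.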

9. Missing Validation for Third-Party Dependencies

External APIs, SDKs, payment gateways, and identity services affect many functional flows. Teams often assume these integrations work without fully verifying them.

How to avoid it

Write tests that include dependent services. Validate responses, delays, and edge-case behaviours. Monitor failures caused by third-party services.
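Alongside tests against a provider's sandbox, a test double lets you force responses that are hard to trigger on demand, such as declines, slow replies, or timeouts like the dropped connection mentioned earlier. Everything below is hypothetical: `FakePaymentGateway` does not mirror any real provider's API, and mapping a timeout to a "pending" state is an assumed product decision, not a universal rule.

```python
import time


class FakePaymentGateway:
    """Test double for an external payment API (hypothetical interface)."""

    def __init__(self, behaviour: str = "approve", delay_s: float = 0.0):
        self.behaviour = behaviour
        self.delay_s = delay_s

    def charge(self, amount_cents: int) -> dict:
        time.sleep(self.delay_s)  # simulate network latency
        if self.behaviour == "timeout":
            raise TimeoutError("gateway did not respond")
        if self.behaviour == "decline":
            return {"status": "declined", "code": "insufficient_funds"}
        return {"status": "approved", "amount": amount_cents}


def checkout(gateway, amount_cents: int) -> str:
    """App code under test: maps gateway outcomes to user-facing states."""
    try:
        result = gateway.charge(amount_cents)
    except TimeoutError:
        # Assumed behaviour: don't retry blindly, which could charge twice.
        return "payment_pending"
    return "paid" if result.get("status") == "approved" else "payment_failed"


def test_gateway_outcomes():
    assert checkout(FakePaymentGateway("approve"), 1999) == "paid"
    assert checkout(FakePaymentGateway("decline"), 1999) == "payment_failed"
    assert checkout(FakePaymentGateway("timeout"), 1999) == "payment_pending"
    # A slow but successful response should still complete the order.
    assert checkout(FakePaymentGateway("approve", delay_s=0.01), 500) == "paid"
```

Fakes verify how your app reacts to each outcome; they don't prove the real provider behaves that way, so keep some checks against the real integration too.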

Final Thoughts

Functional testing protects the core experience. It confirms that every key flow works the way users expect. Most functional testing mistakes happen because teams skip foundational steps such as clear requirements, balanced coverage, clean data, and simple test design.

Avoiding these mistakes allows teams to catch issues earlier, maintain consistent releases, and improve product quality.

FAQs

Q1. What is the main purpose of functional testing?

Ans: Functional testing checks whether each feature works as intended. It validates inputs, outputs, and expected behaviours across user flows.

Q2. Why do functional tests fail even when the feature works?

Ans: Failures often come from unclear requirements, flakiness (for example, unexpected pop-ups or screens), poor test data, or incorrect test design rather than actual product issues.

Q3. How much UI testing is enough?

Ans: UI testing should focus on visible user interactions. Core logic, validations, and rules are better tested through API and integration checks.

Q4. What is the biggest mistake in functional testing?

Ans: The most common mistake is relying on assumptions instead of documented requirements. This creates inconsistent and unreliable tests.
