
Introduction

QA teams test software based on how it behaves when it is used. At release time, the focus is on whether key actions work as expected and whether the system responds correctly to real inputs.

When that behavior changes or breaks, the impact is immediate, regardless of what was modified internally.

To evaluate this reliably, QA teams test software by focusing only on what the system accepts as input and what it returns. This approach is known as black box testing.

The sections below explain the different types of black box testing, the tools used, and how QA teams apply it in real testing scenarios.

What Are the Different Types of Black Box Testing?

Functional Testing

Functional testing checks whether application features work as expected. Test cases are created from requirements and executed through the user interface or exposed APIs.

The tester does not rely on internal code or logic. Inputs are provided and outputs are observed. A test passes if the observed behavior matches the expected result.
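A minimal sketch of that pass/fail rule in Python. The system under test is treated as an opaque callable; `apply_discount` and its 10% rule are hypothetical stand-ins for a real feature, and `run_cases` compares only what comes back with what the requirement says should come back.

```python
from typing import Any, Callable, NamedTuple


class Case(NamedTuple):
    name: str
    inputs: tuple
    expected: Any


def run_cases(system: Callable[..., Any], cases: list[Case]) -> list[str]:
    """Feed each case's inputs to the system and report mismatched outputs.

    Only inputs and returned values are used; the system's internals are
    never inspected.
    """
    failures = []
    for case in cases:
        try:
            actual = system(*case.inputs)
        except Exception as exc:  # a crash is an observable failure too
            failures.append(f"{case.name}: raised {exc!r}")
            continue
        if actual != case.expected:
            failures.append(f"{case.name}: expected {case.expected!r}, got {actual!r}")
    return failures


# Hypothetical feature under test: 10% off orders of 100 or more.
def apply_discount(total: float) -> float:
    return round(total * 0.9, 2) if total >= 100 else total


CASES = [
    Case("below threshold", (99.99,), 99.99),
    Case("at threshold", (100,), 90.0),
    Case("above threshold", (250,), 225.0),
]

if __name__ == "__main__":
    print(run_cases(apply_discount, CASES) or "all cases passed")
```

Because the runner accepts any callable, the same cases could later be pointed at a wrapper that drives a UI or calls an API, without changing the cases themselves.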

Non-Functional Testing

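Non-functional testing evaluates how well the system behaves under different conditions, such as realistic load, different users, and assistive technologies, rather than whether a feature works at all.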

Performance Testing

Performance testing measures how the system responds under realistic usage conditions. Testers observe response times, failures, and timeouts as they appear from the client side.
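As an illustration, client-side timing can be sketched as follows. `measure` is a hypothetical helper: it times each call from the caller's side, counts exceptions as failures, and treats any call slower than an assumed latency budget as a timeout. It does not cancel slow calls, so it is a threshold check rather than true timeout enforcement.

```python
import statistics
import time
from typing import Callable


def measure(call: Callable[[], object], runs: int, budget_s: float) -> dict:
    """Time repeated calls as the client sees them and classify outcomes.

    - an exception counts as a failure (no latency recorded)
    - a call that returns but exceeds budget_s counts as a timeout
      (assumption: the budget is a client-side threshold only)
    """
    latencies, failures, timeouts = [], 0, 0
    for _ in range(runs):
        start = time.perf_counter()
        try:
            call()
        except Exception:
            failures += 1
            continue
        elapsed = time.perf_counter() - start
        if elapsed > budget_s:
            timeouts += 1
        latencies.append(elapsed)
    return {
        "runs": runs,
        "failures": failures,
        "timeouts": timeouts,
        "median_s": statistics.median(latencies) if latencies else None,
        "max_s": max(latencies) if latencies else None,
    }


if __name__ == "__main__":
    # Stand-in for a real request, e.g. an HTTP call made by the client.
    print(measure(lambda: time.sleep(0.01), runs=5, budget_s=0.5))
```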

Usability Testing

Usability testing focuses on ease of use. Testers observe whether users can complete tasks, understand system feedback, and move through workflows without confusion.

Accessibility Testing

Accessibility testing verifies whether the system can be used with assistive technologies. Validation is based on keyboard navigation, screen reader output, and visible focus behavior.

Regression Testing

Regression testing re-executes the same externally defined test cases after updates. The system is treated as a closed unit, and only differences in observable behavior are evaluated.

QA teams:

- Re-run existing test cases that validate user-facing flows and externally visible behavior after each update

- Compare current results with earlier expected outcomes, based only on what the application returns or displays

- Detect regressions when a previously working flow starts failing, behaving differently, or producing unexpected results
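One way to express that comparison, assuming each test case's observable output was saved from an earlier accepted build. The case names and messages are hypothetical; the function flags outputs that changed and cases that no longer produce a result.

```python
import json


def find_regressions(baseline: dict, current: dict) -> dict:
    """Compare observed outputs per test case against a stored baseline.

    Returns cases whose output changed, plus cases missing from the
    current run; both need review before release.
    """
    changed = {
        name: {"expected": expected, "actual": current[name]}
        for name, expected in baseline.items()
        if name in current and current[name] != expected
    }
    missing = sorted(name for name in baseline if name not in current)
    return {"changed": changed, "missing": missing}


# Hypothetical baseline, as it might be stored (e.g. as JSON) after an accepted build.
baseline = json.loads(
    '{"login_ok": "Welcome back", "login_bad_pw": "Incorrect password", "cart_total": "19.98"}'
)
# Outputs observed on the new build for the same test cases.
current = {"login_ok": "Welcome back", "login_bad_pw": "Something went wrong", "cart_total": "19.98"}

if __name__ == "__main__":
    print(find_regressions(baseline, current))
```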

How QA Teams Perform Black Box Testing

QA teams use black box testing to validate software from a user’s perspective. The focus stays on inputs, outputs, and visible behavior, not on how the code is written. This makes the approach practical across products, teams, and release stages.

Validating Core User Workflows

Teams start by mapping critical user journeys such as sign-up, login, checkout, or data submission. Test cases are written around expected behavior:

- What input is provided

- What response or output should appear

- How the system should behave if the input is invalid

This confirms that key flows work as intended before and after every release.
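A workflow can be expressed as an ordered list of steps, each with its input and expected visible response. In this sketch, `AccountService` is a hypothetical in-memory stand-in for the real application, and `run_flow` calls only its public methods, much as a tester would act through the UI or API.

```python
class AccountService:
    """Hypothetical stand-in for the system under test."""

    def __init__(self) -> None:
        self._users: dict[str, str] = {}

    def sign_up(self, email: str, password: str) -> str:
        if "@" not in email:
            return "error: invalid email"
        if len(password) < 8:
            return "error: password too short"
        if email in self._users:
            return "error: account exists"
        self._users[email] = password
        return "ok: account created"

    def log_in(self, email: str, password: str) -> str:
        if self._users.get(email) != password:
            return "error: invalid credentials"
        return "ok: logged in"


# Each step: (action, inputs, expected visible response). Valid and invalid inputs mixed.
SIGNUP_LOGIN_FLOW = [
    ("sign_up", ("ana@example.com", "s3cretpass"), "ok: account created"),
    ("sign_up", ("ana@example.com", "s3cretpass"), "error: account exists"),
    ("sign_up", ("not-an-email", "s3cretpass"), "error: invalid email"),
    ("log_in", ("ana@example.com", "wrong"), "error: invalid credentials"),
    ("log_in", ("ana@example.com", "s3cretpass"), "ok: logged in"),
]


def run_flow(service, steps) -> list[str]:
    """Run steps in order against one service instance; return mismatches."""
    problems = []
    for i, (action, args, expected) in enumerate(steps, start=1):
        actual = getattr(service, action)(*args)
        if actual != expected:
            problems.append(f"step {i} {action}{args}: expected {expected!r}, got {actual!r}")
    return problems


if __name__ == "__main__":
    print(run_flow(AccountService(), SIGNUP_LOGIN_FLOW) or "flow passed")
```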

Supporting Functional Testing Efforts

Black box testing plays a central role in functional testing. QA teams verify whether each feature meets stated requirements without inspecting internal logic. Common checks include:

- Whether a form rejects incorrect values

- Whether error messages appear at the right time

- Whether integrations return expected responses

Because these checks mirror real usage, they often catch issues that unit-level checks miss.
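For the integration check in particular, a test can validate the response payload without knowing how the service produced it. The contract here (field names, types, and allowed status values) is an assumption made for illustration.

```python
import json

# Hypothetical contract for an order-status integration: field -> expected type.
ORDER_STATUS_CONTRACT = {"order_id": str, "status": str, "items": list}
ALLOWED_STATUSES = {"pending", "shipped", "delivered"}


def check_response(body: str) -> list[str]:
    """Validate a raw JSON response body against the assumed contract.

    Only the returned payload is inspected, not how the service built it.
    """
    try:
        data = json.loads(body)
    except json.JSONDecodeError as exc:
        return [f"body is not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["body is not a JSON object"]

    problems = []
    for field, expected_type in ORDER_STATUS_CONTRACT.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            problems.append(f"wrong type for {field}: {type(data[field]).__name__}")
    status = data.get("status")
    if isinstance(status, str) and status not in ALLOWED_STATUSES:
        problems.append(f"unexpected status: {status}")
    return problems


if __name__ == "__main__":
    print(check_response('{"order_id": "A1", "status": "shipped", "items": []}'))
```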

How HeadSpin Helps:

HeadSpin supports functional validation by running these checks across device models, OS versions, and network profiles. This helps teams see where feature behavior changes across execution environments.

Enabling Efficient Regression Testing

As applications evolve, QA teams reuse black box test suites to confirm that new changes have not broken existing functionality. This is especially useful when:

- Features are updated frequently and previously working user flows need quick re-validation after each change

- Multiple teams contribute to the same codebase and changes in one area can affect visible behavior elsewhere

- Release cycles are short and there is limited time to re-test functionality manually before deployment

Automated black box tests are commonly added to CI pipelines and run on every build.
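CI systems generally treat a non-zero exit status as a failed step, so a black box suite can gate a build by exiting with 1 when any check fails. The check functions in this sketch are hypothetical placeholders for tests that would drive the real UI or API.

```python
import sys
from typing import Callable


# Hypothetical checks; real ones would exercise the deployed build from outside.
def check_homepage_loads() -> bool:
    return True


def check_login_rejects_bad_password() -> bool:
    return True


CHECKS: dict[str, Callable[[], bool]] = {
    "homepage loads": check_homepage_loads,
    "login rejects bad password": check_login_rejects_bad_password,
}


def _passes(check: Callable[[], bool]) -> bool:
    """A check that raises is reported as a failure, not a crash of the runner."""
    try:
        return bool(check())
    except Exception:
        return False


def main(checks: dict = CHECKS) -> int:
    failed = [name for name, check in checks.items() if not _passes(check)]
    for name in failed:
        print(f"FAIL {name}")
    print(f"{len(checks) - len(failed)}/{len(checks)} checks passed")
    return 1 if failed else 0  # non-zero exit marks the CI step as failed


if __name__ == "__main__":
    sys.exit(main())
```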

How HeadSpin Helps:

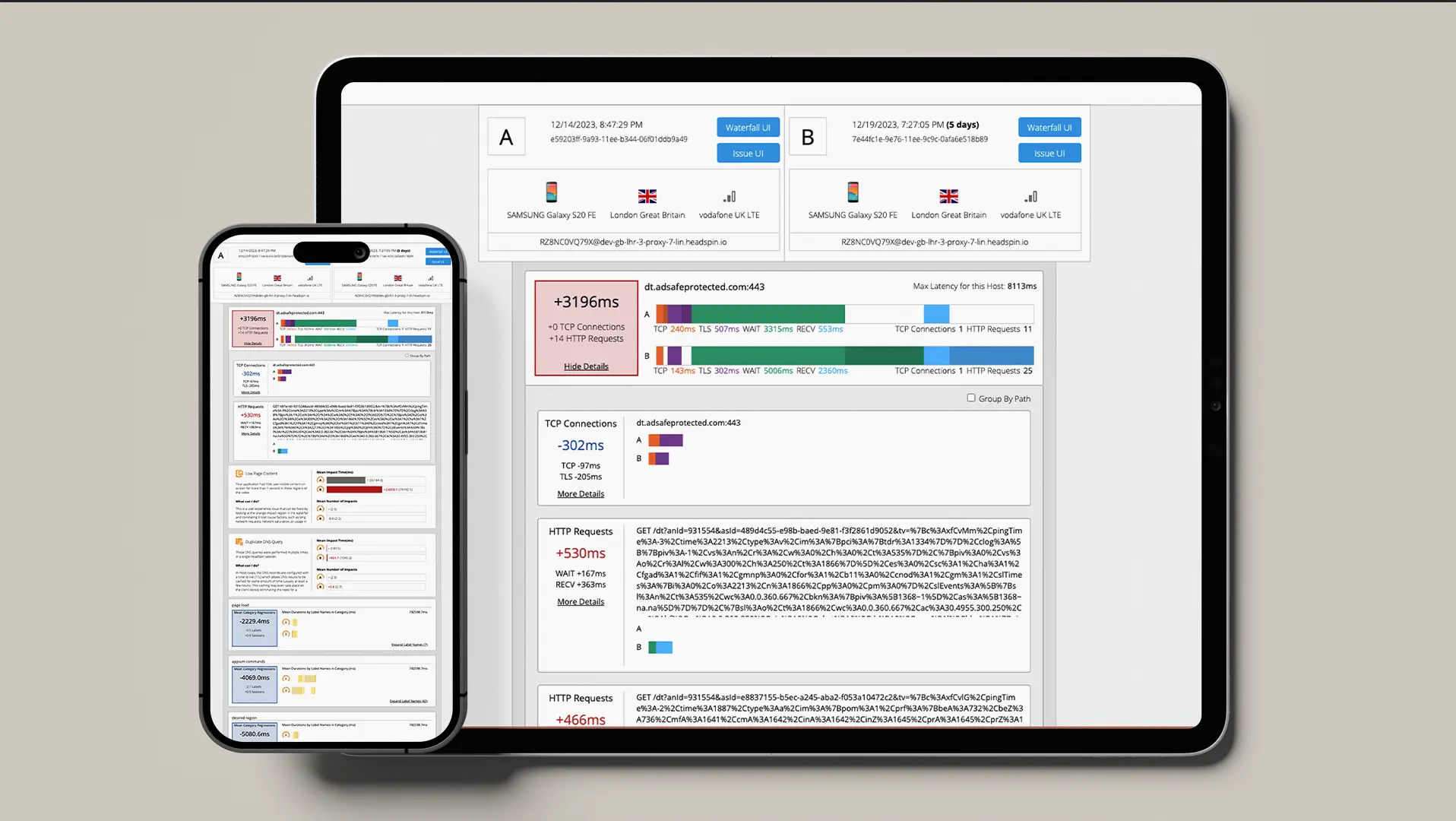

HeadSpin supports regression testing by running the same black box test suites across builds, devices, OS versions, and network conditions, while visually comparing performance KPIs across test runs in the HeadSpin interface. This helps teams identify regressions that appear only under specific conditions, such as a particular device model, OS version, or network.
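Independent of any specific platform, spotting condition-specific regressions comes down to comparing the same test across builds within each environment. The records and the `regressions_by_environment` helper in this sketch are hypothetical; it reports tests that passed on the old build but fail on the new one, grouped by device, OS version, and network.

```python
from collections import defaultdict

# Each record: (build, device, os_version, network, test_name, passed). Hypothetical data.
RESULTS = [
    ("1.4.0", "Device A", "Android 14", "wifi", "checkout", True),
    ("1.4.0", "Device A", "Android 14", "3g", "checkout", True),
    ("1.4.0", "Device B", "Android 13", "wifi", "checkout", True),
    ("1.5.0", "Device A", "Android 14", "wifi", "checkout", True),
    ("1.5.0", "Device A", "Android 14", "3g", "checkout", False),
    ("1.5.0", "Device B", "Android 13", "wifi", "checkout", True),
]


def regressions_by_environment(results, old_build: str, new_build: str) -> dict:
    """Map (device, os_version, network) -> tests that went from pass to fail."""
    outcome = {
        (build, device, os_version, network, test): passed
        for build, device, os_version, network, test, passed in results
    }
    found = defaultdict(list)
    for (build, device, os_version, network, test), passed in outcome.items():
        if build != old_build or not passed:
            continue
        if outcome.get((new_build, device, os_version, network, test)) is False:
            found[(device, os_version, network)].append(test)
    return dict(found)


if __name__ == "__main__":
    print(regressions_by_environment(RESULTS, "1.4.0", "1.5.0"))
```

Here only the slow-network run of `checkout` on one device regresses, which a single-environment run would not reveal.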

Covering Non-Functional Behavior

Black box testing also applies to non-functional areas such as performance, usability, and compatibility. Examples include:

- Measuring response times under varying usage conditions

- Checking app performance across browsers, devices, or environments

- Validating error handling during network disruptions
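Error handling under network disruption can be checked by injecting failures at the network boundary and asserting only on what the user would see. In this sketch, `load_profile`, its retry count, and its offline message are hypothetical; `flaky` is a fake transport standing in for real network shaping.

```python
class NetworkError(Exception):
    """Raised by a transport when the network is unavailable."""


def load_profile(fetch, retries: int = 2) -> str:
    """Hypothetical client code: returns the text a user would see.

    fetch is any callable that returns profile data or raises NetworkError.
    """
    for _attempt in range(retries + 1):
        try:
            return f"Hello, {fetch()['name']}"
        except NetworkError:
            continue
    return "You appear to be offline. Please try again."


def flaky(failures: int, payload: dict):
    """Fake transport that fails `failures` times, then succeeds."""

    def fetch():
        nonlocal failures
        if failures > 0:
            failures -= 1
            raise NetworkError("connection reset")
        return payload

    return fetch


if __name__ == "__main__":
    for n in (0, 2, 3):
        print(n, "->", load_profile(flaky(n, {"name": "Ana"})))
```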

How HeadSpin Helps:

HeadSpin provides visibility into user-observed performance characteristics such as page load times, device performance, and network conditions, all captured in a precise time-series view. This allows QA teams to evaluate how an application behaves under real usage conditions instead of relying on metrics inferred from internal logs.

Enabling Collaboration Across Roles

Because black box tests do not require knowledge of internal code, they are easier to share across QA, product, and business teams. Test cases can be reviewed by non-technical stakeholders, improving alignment on expected behavior.
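One practical way to keep cases reviewable is to store them as a plain-language table that a runner reads. The CSV content, the `newsletter_signup` feature, and its messages are hypothetical examples.

```python
import csv
import io

# Hypothetical test table in plain language; reviewers can read and edit it
# without touching code.
CASES_CSV = """\
scenario,input,expected
Empty email is rejected,,Please enter your email
Malformed email is rejected,ana-at-example,Please enter a valid email
Valid email is accepted,ana@example.com,Thanks! Check your inbox
"""


def newsletter_signup(email: str) -> str:
    """Hypothetical system under test: returns the message shown to the user."""
    if not email:
        return "Please enter your email"
    if "@" not in email:
        return "Please enter a valid email"
    return "Thanks! Check your inbox"


def run_table(text: str, system) -> list[tuple[str, bool]]:
    """Run each row through the system; pair the scenario with pass/fail."""
    rows = csv.DictReader(io.StringIO(text))
    return [(row["scenario"], system(row["input"]) == row["expected"]) for row in rows]


if __name__ == "__main__":
    for scenario, ok in run_table(CASES_CSV, newsletter_signup):
        print("PASS" if ok else "FAIL", scenario)
```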

How HeadSpin Helps:

HeadSpin supports collaboration through downloadable test reports that make black box test results easy to share across QA, product, and business teams. Issue cards give non-technical teams a clear view of reported issues, helping them understand impact and priority.

Technical teams can use the time-series view of performance KPIs along with session recordings to see what occurred during the test run, where the issue appeared, and how it affected the user flow. This shared visibility reduces back-and-forth between teams and helps ground release decisions in what was observed during test sessions.

Way Forward

After adopting black box testing, teams should aim to:

- Keep test coverage aligned with critical user flows as features evolve

- Reuse and refine regression suites instead of expanding test volume without purpose

- Pair functional checks with non-functional observations, such as response behavior and failure handling

- Run tests under consistent, repeatable conditions to spot build-over-build changes early

Platforms like HeadSpin support this direction by enabling teams to run tests across real devices, environments, and network conditions while maintaining continuity across releases. This helps QA teams focus less on recreating setups and more on interpreting user-visible results.

Black box testing works best when it runs in real environments. HeadSpin makes that possible.

FAQs

Q1. When should black box testing not be used?

Ans: Black box testing is not suitable when teams need to understand internal logic or data flow. It confirms that something failed, but not the underlying cause.

Q2. Is black box testing suitable for automation?

Ans: It works well for automation when tests are based on user-visible behavior. Tests that rely on internal UI structure tend to break more often.
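The difference can be shown with a toy page. This sketch uses Python's standard `html.parser` to collect button labels; `locate_by_position` mimics a locator tied to page structure, while `index_by_label` finds a button by its visible text. The page markup is hypothetical.

```python
from html.parser import HTMLParser


class ButtonCollector(HTMLParser):
    """Collect the visible text of every <button> in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.labels: list[str] = []
        self._in_button = False

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self._in_button = True
            self.labels.append("")

    def handle_endtag(self, tag):
        if tag == "button":
            self._in_button = False

    def handle_data(self, data):
        if self._in_button:
            self.labels[-1] += data.strip()


def buttons(html: str) -> list[str]:
    parser = ButtonCollector()
    parser.feed(html)
    parser.close()
    return parser.labels


def locate_by_position(html: str, index: int) -> str:
    """Brittle: returns whatever button sits at a fixed position."""
    return buttons(html)[index]


def index_by_label(html: str, label: str) -> int:
    """Resilient: finds the button by what the user sees (ValueError if absent)."""
    return buttons(html).index(label)


OLD_PAGE = "<form><button>Cancel</button><button>Place order</button></form>"
# A later build adds a button before the others.
NEW_PAGE = "<form><button>Apply coupon</button><button>Cancel</button><button>Place order</button></form>"

if __name__ == "__main__":
    print(locate_by_position(OLD_PAGE, 1), "|", locate_by_position(NEW_PAGE, 1))
    print(index_by_label(NEW_PAGE, "Place order"))
```

After the layout change, the positional locator silently targets "Cancel", while the label-based lookup still finds "Place order".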

Q3. How do teams choose what to test with a black box approach?

Ans: Teams usually focus on critical user flows that impact trust, revenue, or repeated usage. Less critical paths are tested less frequently.
